Many businesses want to use generative AI to improve their operations. Moving from a simple prototype to a production system, however, brings its own challenges. In a recent podcast episode, Michelle Avery, Group Vice President of AI at WillowTree, discussed how to make that transition.

Michelle Avery leads AI initiatives at WillowTree, a digital product agency known for crafting innovative solutions. With over 17 years of experience in engineering, AI, FinTech, and robotics, she is passionate about developing responsible, production-ready AI applications that address real-world business problems.

This article distills the key points from the podcast, focusing on the journey from prototyping generative AI solutions to deploying them in production environments. We’ll explore the importance of identifying genuine business problems, the role of data accessibility, and strategies to ensure user acceptance. By understanding these aspects, businesses can better navigate the path from AI concept to operational success.

Understanding Generative AI and the Role of Prototyping

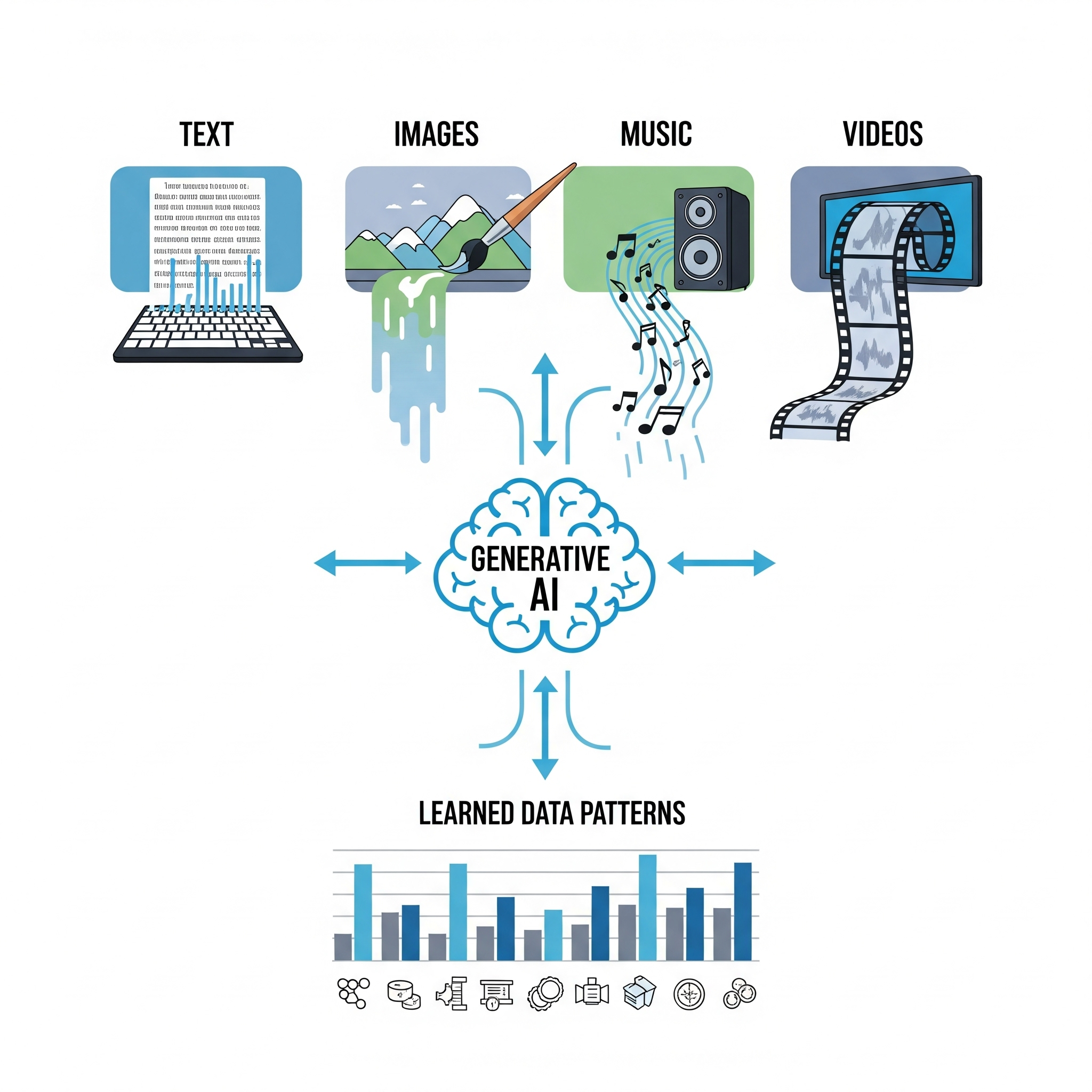

What Is Generative AI?

Generative AI refers to systems that create text, images, code, or other content based on patterns learned from data. Rather than looking up a stored answer, the software produces something new from what it has learned. In business, it is opening new possibilities in customer service, content creation, data analysis, and more.

However, this doesn’t mean it fits everywhere. Michelle Avery points out that many companies rush into AI because they have a budget for it, not because it solves a real need. That’s where problems start. The real value of generative AI comes when it’s used to solve actual pain points—not just to say you’re “using AI.”

Why Prototyping Matters in Gen AI

Prototyping is how companies test the waters before jumping in. Instead of building a full system upfront, they create a small version to answer one big question: “Can this work for us?”

Michelle stresses that prototyping isn’t just about building something cool—it’s about learning. It helps teams figure out if generative AI is the right tool, how well it performs, what it costs to run, and whether it’s scalable. With AI moving fast, skipping this step can lead to wasted time and money. It’s like trying to build a house without checking if the ground is stable.

Prototypes also give teams something real to show—something they can test, improve, and share with others inside the company. That early feedback is often more valuable than the tech itself.

The Prototyping Process: Ideation to Execution

Starting with the Business Problem

A good prototype starts with a real problem, not with the technology itself. Michelle Avery highlights that projects fail when teams begin with “We have generative AI—where can we use it?” instead of asking, “What problem are we trying to solve?” A solution-first mindset has a low success rate, while focusing on the business challenge greatly improves the odds.

Every prototype should connect to measurable outcomes. Whether it’s cutting operational costs, improving user experience, or increasing efficiency, knowing what success looks like ensures that the project delivers actual value rather than just a flashy experiment.

Designing an Evaluation-Driven Prototype

A prototype must have a clear purpose. Michelle emphasizes defining “what good looks like” before writing a single line of code. This helps teams know if the idea works and whether it’s worth scaling. Think of it as drawing a map before starting a journey—you know exactly where you’re headed and how to measure progress.
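One way to write "what good looks like" down before building anything is a small evaluation set paired with a pass threshold the team agrees on in advance. The sketch below illustrates that idea under simple assumptions. The `EvalCase` and `run_eval` names, the "must include these phrases" check, and the 0.9 threshold are all hypothetical choices, not WillowTree's method. A real prototype would likely use richer criteria, such as human ratings or rubric-based grading.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One example input plus a simple check encoding 'what good looks like'."""
    prompt: str
    must_include: list[str]  # hypothetical criterion: phrases a good answer must contain


def run_eval(generate: Callable[[str], str], cases: list[EvalCase],
             pass_threshold: float) -> tuple[float, bool]:
    """Score a candidate generator against the agreed cases.

    Returns the pass rate and whether it meets the threshold set before building.
    """
    passed = 0
    for case in cases:
        answer = generate(case.prompt).lower()
        # A case passes only if every required phrase appears in the answer.
        if all(phrase.lower() in answer for phrase in case.must_include):
            passed += 1
    rate = passed / len(cases) if cases else 0.0
    return rate, rate >= pass_threshold


# Stand-in generator; a real prototype would call a model here.
def fake_generate(prompt: str) -> str:
    if "password" in prompt:
        return "We'll email you a reset link."
    return "Support is open 9am to 5pm."


cases = [
    EvalCase("How do I reset my password?", ["reset link"]),
    EvalCase("What are your support hours?", ["9am", "5pm"]),
]
rate, ok = run_eval(fake_generate, cases, pass_threshold=0.9)
print(rate, ok)  # 1.0 True
```

Because the cases and threshold are fixed before the prototype exists, each new iteration can be scored the same way, so "does this work?" has a concrete answer.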

At the same time, innovation must be balanced with practicality. A prototype should test the riskiest assumptions first instead of trying to solve every possible problem. It’s better to validate one strong use case than to spread resources thin across many ideas.

Technical Considerations in Early Prototyping

Data is often the biggest roadblock. Companies may struggle to access the right systems or integrate data sources that the AI model needs. Without solving this early, even the best prototype can’t move forward. Michelle’s team often works closely with clients to find ways to test ideas using available data or create mock datasets when needed.

Building a lightweight but functional prototype is key. The goal isn't to make it perfect but to create something tangible that can answer critical questions: Does this work? How well? Is it worth scaling? A quick, focused prototype saves time and avoids costly mistakes later.

Transitioning from Prototype to Production

Recognizing When a Prototype Is Ready

A prototype doesn’t magically turn into a product. You need proof it works in real conditions. That proof often comes from user feedback. Michelle Avery explains the importance of collecting real input from users—not just on whether the AI performs, but whether it’s useful and fits into their workflow.

That feedback helps define success. It could be task completion rates, response accuracy, or time saved. If users rely on it and the results are consistent, it’s a good sign the prototype is ready to move forward. But without clear metrics, you’re guessing.
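To make "ready to move forward" a check instead of a guess, the metrics above can be computed from prototype sessions and compared against targets. The sketch below assumes a hypothetical `Session` record with a task-completion flag and minutes saved. The `readiness_report` helper and its default targets (80% completion, 5 minutes saved) are illustrative values only; each team would set its own.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    completed_task: bool   # did the user finish their task with the AI's help?
    minutes_saved: float   # assumed metric: time saved versus the old workflow


def readiness_report(sessions: list[Session], min_completion: float = 0.8,
                     min_minutes_saved: float = 5.0) -> dict:
    """Summarize prototype sessions and compare them to agreed targets."""
    if not sessions:
        raise ValueError("need at least one session to judge readiness")
    completion = sum(s.completed_task for s in sessions) / len(sessions)
    saved = mean(s.minutes_saved for s in sessions)
    return {
        "completion_rate": completion,
        "avg_minutes_saved": saved,
        "ready": completion >= min_completion and saved >= min_minutes_saved,
    }


sessions = [Session(True, 6.0), Session(True, 8.0), Session(False, 0.0), Session(True, 10.0)]
print(readiness_report(sessions))
# {'completion_rate': 0.75, 'avg_minutes_saved': 6.0, 'ready': False}
```

In this example, time saved clears its target, but the completion rate does not. That points the team at the specific gap to close before scaling.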

Bridging the Technical Gaps

A prototype is often built to learn, not to last. Michelle notes that things like logging, monitoring, security layers, and a polished user interface are often missing from early builds—and that’s okay. Once a prototype proves itself, those pieces can be added as part of scaling.

Another reason for building a functional prototype first is cost clarity. Running the model gives teams a feel for infrastructure needs, how often it’ll be used, and where extra expenses may show up. That makes it easier to plan the budget for production.
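As a rough illustration of turning prototype measurements into a budget figure, the sketch below multiplies observed usage by per-request model cost and adds a fixed monthly amount for infrastructure such as hosting, monitoring, or guardrail services. It assumes token-based pricing. The function name and all prices are hypothetical placeholders, not any provider's actual rates.

```python
def monthly_cost(requests_per_day: float, avg_input_tokens: float,
                 avg_output_tokens: float, price_in_per_1k: float,
                 price_out_per_1k: float, fixed_monthly: float = 0.0,
                 days: int = 30) -> float:
    """Estimate monthly spend from usage measured during the prototype.

    Prices are per 1,000 tokens and are placeholders; substitute your provider's rates.
    """
    per_request = ((avg_input_tokens / 1000) * price_in_per_1k
                   + (avg_output_tokens / 1000) * price_out_per_1k)
    return requests_per_day * days * per_request + fixed_monthly


# Hypothetical numbers: 2,000 requests/day, 1,500 input and 300 output tokens each.
cost = monthly_cost(2000, 1500, 300, price_in_per_1k=0.001,
                    price_out_per_1k=0.002, fixed_monthly=500.0)
print(f"{cost:,.2f}")  # 626.00
```

Re-running the estimate with usage figures from real pilot traffic, rather than guesses, is what makes the forecast more reliable than a ballpark.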

Addressing Business and Operational Challenges

Moving to production isn't just a tech decision; it involves risk and company-wide alignment. Michelle's team often kicks off this phase with a "trustworthy AI" workshop. The idea is to bring together product owners, legal, marketing, and engineering to decide which risks matter most, such as data security or harmful output reaching the public.

For internal tools, you might care less about outside abuse. But if members of the public can interact with the AI, you need guardrails against misuse and harmful output. These early decisions shape architecture, cost, and risk later on.

Stakeholder Engagement and Education

Involving a Cross-Functional Team

Building a generative AI product isn’t just the job of engineers. Michelle Avery emphasizes the need to bring in voices from across the company—legal, marketing, product, branding—right from the start. Why? Because AI doesn’t live in a vacuum. Legal needs to weigh in on compliance, marketing wants to protect brand tone, and product teams know what users expect.

To make this work, communication has to be simple and direct. Not everyone understands AI jargon—and they don’t need to. Michelle’s team runs workshops that explain risks using real examples from similar industries. This helps everyone understand what’s possible, what could go wrong, and how their input matters.

Setting Realistic Expectations for AI Solutions

One of the biggest challenges? Breaking the myth that Gen AI is “just a bot.” Many people still picture a chatbot with canned replies. Michelle pushes back on that idea. The tech is more flexible—but only if people stop limiting it to question-answer boxes.

To help teams think bigger, Michelle shares mockups and use cases that go beyond chat. This opens up conversations about different interfaces, smarter workflows, and better outcomes. It also helps shift the focus from the tech to the user experience.

Setting clear goals is just as important. Teams need to define what success looks like upfront. Is it faster support response? Higher customer satisfaction? More accurate recommendations? By tying technical results to business value, companies can track performance and avoid hype-driven disappointment.

Measuring Success: From Evaluation to ROI

Defining Key Performance Indicators (KPIs)

Measuring success in generative AI starts with knowing what to measure—and not every metric needs to be technical. Michelle Avery explains that while system performance matters, it’s the business impact that makes or breaks a project.

Think about KPIs like time saved, cost reduction, user adoption, or satisfaction scores. These connect the AI’s output to real outcomes. On the technical side, you’ll want to monitor accuracy, error rates, or latency—but those only matter if they support the bigger goal.

And when it comes to ROI, don’t just guess. A working prototype can show you how often the tool will be used, what resources it consumes, and what guardrails are needed. That lets you forecast actual costs, not just ballpark estimates.

Iteration and Continuous Improvement

The first version is never the final one. Michelle stresses the value of designing your system to collect feedback from the start. Users need to know they’re working with AI—and they should have an easy way to say, “This didn’t work for me.”

That input helps teams spot problems early and tweak responses, workflows, or interface elements. It’s not just about fixing bugs—it’s about learning how users interact with the system and making it better over time.
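A minimal way to build that feedback loop in from the start is to log a helpful/not-helpful signal, plus an optional reason, against each AI response. You can then review which complaints come up most often. The `Feedback` and `FeedbackLog` classes below are a hypothetical in-memory sketch. A production system would persist this data and link it to the logged prompt and response.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Feedback:
    response_id: str
    helpful: bool
    reason: str = ""  # optional category, e.g. "wrong answer" (hypothetical labels)
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class FeedbackLog:
    """In-memory store for 'this didn't work for me' style signals."""

    def __init__(self) -> None:
        self._items: list[Feedback] = []

    def record(self, response_id: str, helpful: bool, reason: str = "") -> None:
        self._items.append(Feedback(response_id, helpful, reason))

    def negative_rate(self) -> float:
        """Share of responses users marked as not helpful."""
        if not self._items:
            return 0.0
        return sum(not f.helpful for f in self._items) / len(self._items)

    def top_complaints(self, n: int = 3) -> list[tuple[str, int]]:
        """Most common reasons given on negative feedback, highest count first."""
        counts = Counter(f.reason for f in self._items if not f.helpful and f.reason)
        return counts.most_common(n)


log = FeedbackLog()
log.record("r1", True)
log.record("r2", False, "wrong answer")
log.record("r3", False, "too slow")
log.record("r4", False, "wrong answer")
print(log.negative_rate())   # 0.75
print(log.top_complaints())  # [('wrong answer', 2), ('too slow', 1)]
```

Grouping complaints by reason shows whether the next iteration should target response quality, speed, or the interface itself.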

As feedback loops tighten and performance improves, the prototype becomes a full product. The process doesn’t stop at launch—it’s an ongoing cycle of test, learn, and adjust.

Conclusion: Turning AI Ideas into Real Business Wins

Prototyping generative AI isn’t just a technical exercise—it’s a business strategy. As Michelle Avery shared, success comes from solving real problems, involving the right people early, and learning fast. When teams align on purpose, evaluate based on outcomes, and stay flexible enough to improve, the path from prototype to production becomes much clearer.

Whether you’re just starting or refining an existing AI initiative, focus less on the hype and more on the impact. Ask better questions. Define success early. Keep users in the loop. And most importantly, treat your prototype as a learning tool—not a final product.

Because in the end, the best AI solutions aren’t just built—they’re earned through thoughtful execution.